Ever since Zhamak Dehghani's initial blog post, the idea of creating a decentralized data platform instead of a single central one has gained a lot of traction. The paradigm shift it introduced was triggered by the observation that, while domain-driven design heavily influenced the way we design operational systems, central data platforms kept being developed as centralized monoliths. This article reviews two of Zhamak Dehghani's blog posts as well as an on-demand webinar that covers the data mesh paradigm in depth.

The Why, What, and How of the Data Mesh Paradigm

Recently, we shared some of our insights and thoughts about the data mesh paradigm in a webinar. Watch this on-demand webinar with Steven Nooijen, Guillermo Sánchez Dionis, and Niels Zeilemaker to:

- Learn what a data mesh is.

- Get insight into the three main architectural failure modes of a monolithic data platform and the required paradigm shift.

- Learn to point out the differences between operational and analytical data, as well as the differences in access patterns, use cases, personas of data users, and technology used to manage these data types.

- Gain an understanding of the characteristics of a successful data mesh.

- Become familiar with several critical questions you need to answer to implement a data mesh successfully.

Architectural Failure Modes of a Centralized Data Platform

Zhamak introduces the "why" of Data Mesh by describing the three main architectural failure modes of a monolithic data platform. The first failure mode is "centralized and monolithic": a single platform that needs to ingest data from all corners of the organization, convert that data into something trustworthy, and finally serve it to a diverse set of consumers. Smaller organizations might make this work, but in larger organizations, the sheer number of datasets and the need for rapid experimentation put huge pressure on the centralized data platform.

A centralized and monolithic data platform

The next failure mode is "coupled pipeline decomposition", wherein a single pipeline is split over multiple teams to make development more scalable. While this allows for some scale, it also slows the delivery of new data, as the stages are highly coupled and can only be worked on in a waterfall-like fashion.

And finally, "siloed and hyper-specialized ownership" describes teams of data platform engineers attempting to create usable data while having very little understanding of the source systems that data is based on. In practice, this results in data platform teams being overstretched while consumers fight for the "top spot" on the backlog.

The Great Data Divide

In her second blog post, Zhamak additionally stresses the differences between operational and analytical data. The former is data stored in databases backing operational systems (e.g., microservices). The latter is data used to give insight into the performance of the company over time. ETL/ELT pipelines bridge the gap between the two types of data.

The differences between operational and analytical data also result in differences in access patterns, use cases, personas of data users, and technology used to manage these data types. However, this difference in technology should not lead to a separation of the organization, teams, and people who work on them, as is the case with a centralized data platform.

The Paradigm Shift

By applying principles from Eric Evans's book Domain-Driven Design, Zhamak introduced the Data Mesh concept in her first blog post. A Data Mesh is a decentralized data platform that keeps the ownership of data in the domains.

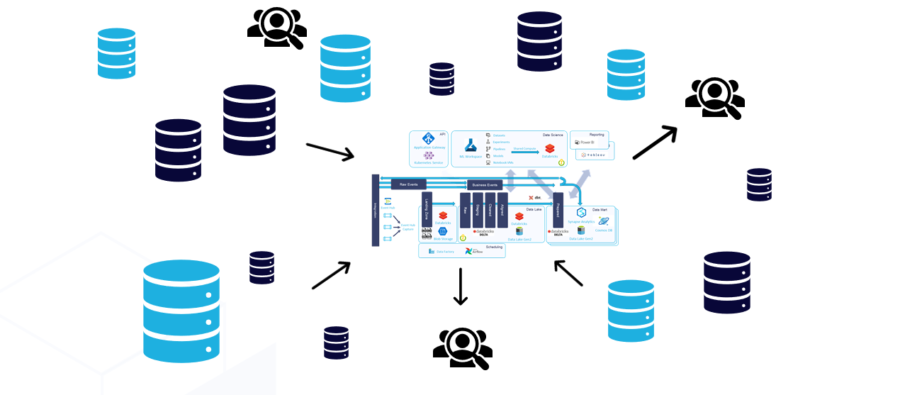

Zhamak describes this concept as the reverse of a centralized data platform: it is all about data locality and ownership. Data doesn't flow out of a domain into a centralized platform but is hosted and served by the domains themselves.

A Successful Data Mesh

To achieve scale, Zhamak states that any Data Mesh implementation should implement four underpinning principles: 1) domain-oriented decentralized data ownership and architecture, 2) data as a product, 3) self-serve data infrastructure as a platform, and 4) federated computational governance. These principles should be considered collectively necessary and sufficient.

Domain Ownership

As introduced before, Data Mesh is founded on the belief that domain ownership is crucial to support continuous change and scalability. Data Mesh achieves this by moving responsibility to the people who are closest to the data. By following the seams of the organization, Data Mesh localizes the impact of changes to the domain.

In practice, this will result in domains not only providing an operational API, but also an analytical one. Zhamak gives an example of a podcasts domain that provides an API to "create a new podcast episode" (an operational API), but also an API that provides the number of listeners to a podcast over time (an analytical API). Both are maintained by the same domain/team.
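To make the split between the two APIs concrete, here is a minimal sketch of the podcasts example as a single domain that owns both its operational and its analytical interface. The class and method names (`PodcastDomain`, `create_episode`, `record_play`, `listeners_over_time`) are hypothetical, storage is in-memory for illustration, and plays per day are used as a simple stand-in for "listeners over time".

```python
from collections import Counter
from dataclasses import dataclass, field
from datetime import date


@dataclass
class PodcastDomain:
    """Hypothetical podcasts domain that owns both its operational and analytical data."""

    episodes: dict[str, str] = field(default_factory=dict)  # episode_id -> title
    plays: list[tuple[str, date]] = field(default_factory=list)  # (episode_id, day played)

    # --- Operational API: serves the running application ---
    def create_episode(self, episode_id: str, title: str) -> None:
        if episode_id in self.episodes:
            raise ValueError(f"episode {episode_id!r} already exists")
        self.episodes[episode_id] = title

    def record_play(self, episode_id: str, day: date) -> None:
        if episode_id not in self.episodes:
            raise KeyError(episode_id)
        self.plays.append((episode_id, day))

    # --- Analytical API: serves consumers who want insight over time ---
    def listeners_over_time(self) -> list[tuple[date, int]]:
        # Counts plays per day (a stand-in for listeners), sorted by date.
        per_day = Counter(day for _, day in self.plays)
        return sorted(per_day.items())
```

Because both interfaces live in the same class, a change to how plays are recorded and a change to how they are reported are made by the same team, which is the localization of change the principle aims for.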

Domain Data as a Product

For data to be considered a product, Zhamak outlines a few basic qualities each data product should have. A data product should be discoverable, addressable, trustworthy, self-describing, interoperable, and, finally, secure. Consumers of that data should be treated as customers.
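One way to make these qualities checkable is to have every data product publish a small descriptor about itself. The sketch below assumes a hypothetical `DataProductDescriptor` whose fields map one-to-one onto the six qualities; the field names, the set of accepted formats, and the pass/fail rules are illustrative choices, not part of Zhamak's description.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical set of formats the organization has agreed on (assumption).
STANDARD_FORMATS = {"parquet", "avro", "json"}


@dataclass
class DataProductDescriptor:
    """Hypothetical metadata a data product publishes about itself."""

    name: str
    description: str = ""  # discoverable: something a catalog can index
    address: str = ""  # addressable: a unique, stable location
    freshness_slo_hours: Optional[int] = None  # trustworthy: a published service level
    schema: dict[str, str] = field(default_factory=dict)  # self-describing: column -> type
    output_format: str = ""  # interoperable: an agreed-upon format
    allowed_roles: set[str] = field(default_factory=set)  # secure: who may read it


def missing_qualities(p: DataProductDescriptor) -> list[str]:
    """Return the qualities this descriptor does not (yet) satisfy."""
    checks = {
        "discoverable": bool(p.description),
        "addressable": bool(p.address),
        "trustworthy": p.freshness_slo_hours is not None,
        "self-describing": bool(p.schema),
        "interoperable": p.output_format in STANDARD_FORMATS,
        "secure": bool(p.allowed_roles),
    }
    return [quality for quality, ok in checks.items() if not ok]
```

A check like this could feed the KPIs a data product owner tracks: a product with an empty result list at least meets the baseline qualities.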

Companies should introduce a new role: the "domain data product owner", who is responsible for objective measures (KPIs) describing the performance of a data product, e.g., the happiness of its customers. Using these KPIs, domain data teams should strive to make their products the best they can be, with clear APIs, understandable documentation, and close tracking of quality and adoption KPIs.

Architecturally, a data product is similar to an architectural quantum, i.e., the smallest unit that can be independently deployed. To achieve this, a data product combines code, data and metadata, and infrastructure. And for it to be a single deployable unit, a Data Mesh should give teams the capabilities to deploy it as such.
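The idea of an architectural quantum can be sketched as a single object that bundles all three parts and is deployed all-or-nothing. `DataProduct` and `deploy` below are hypothetical names; "infrastructure" is just a declared dictionary of resources and "deployment" only runs the transformation, so this illustrates the packaging idea rather than a real deployment mechanism.

```python
from dataclasses import dataclass
from typing import Callable

Row = dict[str, object]


@dataclass(frozen=True)
class DataProduct:
    """Hypothetical single deployable unit: code + data/metadata + infrastructure."""

    name: str
    transform: Callable[[list[Row]], list[Row]]  # code that produces the served data
    metadata: dict[str, str]  # data about the data, e.g. owner or schema version
    infrastructure: dict[str, str]  # declared resources, e.g. storage and compute


def deploy(product: DataProduct, source: list[Row]) -> dict[str, object]:
    """Deploy all parts together and refuse an incomplete unit."""
    for part in ("metadata", "infrastructure"):
        if not getattr(product, part):
            raise ValueError(f"{product.name}: missing {part}, cannot deploy as one unit")
    output = product.transform(source)
    return {
        "name": product.name,
        "rows": len(output),
        "metadata": dict(product.metadata),
        "infrastructure": dict(product.infrastructure),
    }
```

Keeping the three parts in one unit means a team can version, test, and roll out a data product without coordinating with a central team, which is what "independently deployable" asks for.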

Self-Serve Data Platform

This leads to the self-serve data platform. Running a data product requires a fair bit of infrastructure, and the knowledge needed to build and maintain it would be difficult to replicate across all domains. Zhamak addresses this with a principle called the self-serve data platform.

You will find more analytics engineers in the market, than data engineers right now, even though they might not know they are analytics engineers.

Guillermo Sánchez Dionis

This self-serve data platform implements one or more platform planes, which end users interact with to deploy their data products. Zhamak describes three planes: the data infrastructure provisioning plane, the data product developer experience plane, and the data mesh supervision plane. The infrastructure plane allows users to provision new infrastructure. The data product developer experience plane implements standard solutions to run data products, e.g., allowing users to deploy a SQL query as a data product. The data mesh supervision plane provides global services, e.g., tools that let users discover new data products, enforce governance, monitor data quality, etc.
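The "deploy a SQL query as a data product" example can be sketched with Python's built-in `sqlite3`. In this sketch an in-memory SQLite database stands in for provisioned infrastructure, a view stands in for the served data product, and a plain dictionary stands in for the supervision plane's catalog. `deploy_sql_data_product` and `catalog` are hypothetical names, not part of any real platform.

```python
import sqlite3

# Hypothetical supervision-plane catalog: data product name -> description.
catalog: dict[str, str] = {}


def deploy_sql_data_product(
    conn: sqlite3.Connection, name: str, query: str, description: str
) -> None:
    """Developer-experience plane sketch: publish a SQL query as a data product."""
    if not name.isidentifier():
        raise ValueError(f"invalid data product name: {name!r}")
    conn.execute(f"CREATE VIEW {name} AS {query}")  # serve the query result as a view
    catalog[name] = description  # register it so consumers can discover it


# Infrastructure-plane stand-in: an in-memory database instead of real storage.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE plays (episode_id TEXT, play_date TEXT)")
conn.executemany(
    "INSERT INTO plays VALUES (?, ?)",
    [("ep-1", "2021-03-01"), ("ep-1", "2021-03-02"), ("ep-2", "2021-03-02")],
)

deploy_sql_data_product(
    conn,
    "plays_per_day",
    "SELECT play_date, COUNT(*) AS plays FROM plays GROUP BY play_date",
    "Number of podcast plays per day",
)
print(conn.execute("SELECT * FROM plays_per_day ORDER BY play_date").fetchall())
# prints [('2021-03-01', 1), ('2021-03-02', 2)]
```

The domain team only writes the query and a description; creating the serving object and registering it for discovery is handled by the platform function, which is the division of labor the planes are meant to provide.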

Federated Computational Governance

The last principle is that of governance. Here, Zhamak describes which global standards need to be enforced across the mesh and what should be left to the domains to decide. The group responsible for this governance has a difficult job, as it needs to strike a balance between centralization and decentralization. In her second blog post, she includes a table comparing centralized and data mesh governance.
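The "computational" part of the principle suggests that globally agreed rules are expressed as code and applied automatically to every data product. The sketch below assumes two hypothetical global policies (every product has an owner; columns flagged as PII declare a protection method). Any field no policy looks at, such as the `engine` in the usage test, remains a domain decision.

```python
from typing import Callable, Optional

# A data product is described here as a plain dict; its field names are hypothetical.
Product = dict
# A policy returns a violation message, or None if the product complies.
Policy = Callable[[Product], Optional[str]]


def has_owner(p: Product) -> Optional[str]:
    return None if p.get("owner") else "every data product needs an owner"


def pii_is_protected(p: Product) -> Optional[str]:
    # Global rule: any column flagged as PII must declare how it is protected.
    unprotected = [
        column
        for column, meta in p.get("columns", {}).items()
        if meta.get("pii") and not meta.get("protection")
    ]
    return f"PII columns without protection: {unprotected}" if unprotected else None


# The globally agreed policies; everything else is left to the domains.
GLOBAL_POLICIES: list[Policy] = [has_owner, pii_is_protected]


def evaluate(p: Product) -> list[str]:
    """Run every global policy against one data product and collect violations."""
    return [msg for policy in GLOBAL_POLICIES if (msg := policy(p)) is not None]
```

Adding or removing an entry in `GLOBAL_POLICIES` is, in this sketch, where the balance between centralization and decentralization is actually struck.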

Going Forward

The concept as introduced by Zhamak is a powerful one. Highlights for me are:

- The central data platform team(s) are attempting to solve an unsolvable problem: providing trustworthy data to consumers with little or no domain knowledge of their own.

- Ownership of data stays in the domains, localizing the impact of changes.

- A self-serve data platform enables teams to independently deploy data products. This changes the role of the central data platform team to one that develops a product (the platform) instead of providing data.

- Governance is maintained by standardizing inputs and outputs, allowing for uniform access to decentralized data.

However, it also leaves some additional questions that we will explore in the future:

- How small should a data product be? Could it be as small as a single SQL transformation?

- In the supervision plane, features like data quality monitoring, cataloging, and access management should be implemented. But who should do this?

- How should schema changes be handled? Will a breaking schema change lead to a new data product?

- Should alternative implementations of a data product (e.g., a different engine) be allowed? Or, more broadly, what should you govern centrally and what should you leave to the domains?